Your Words Could Land You in Cuffs: AI and Rogue Governments Are Crushing Free Speech

- Lynn Matthews

- Sep 3, 2025


Arrested for a Misunderstood Post

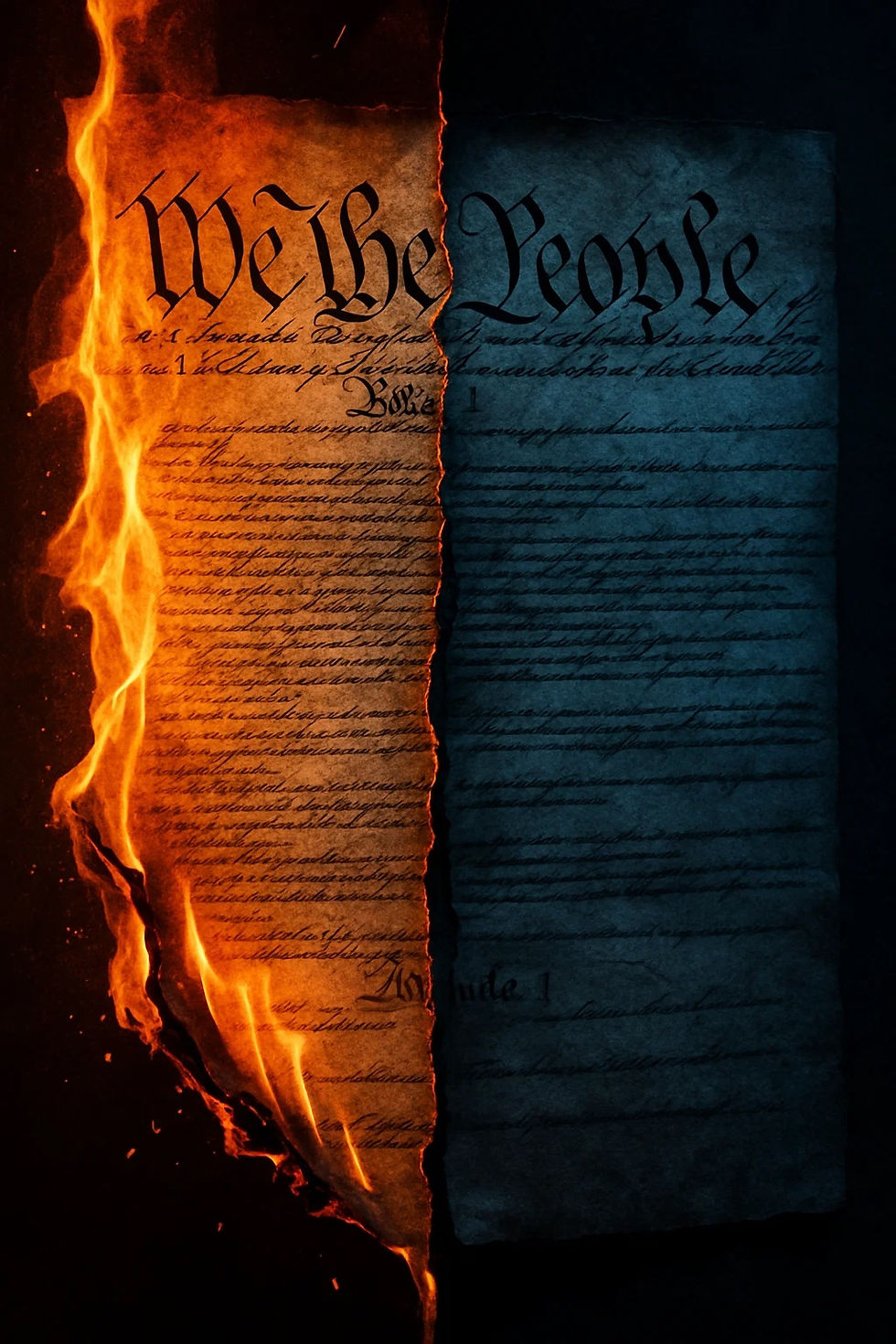

In Dearborn, Michigan, police arrested 27-year-old Anthony James Young from Garden City for posting: “Someone should show up and let a couple of clips out.” Was he talking about video clips for the Arba’een march? Maybe. Police assumed gunfire and cuffed him within hours on August 16, 2025. No guns were found, no plan existed, no threat was real. Yet he’s out on a $5,000 bond, tethered, banned from the internet, and awaiting trial on September 17. This isn’t a fluke—it’s a five-alarm fire. Free speech is burning, and AI like ChatGPT is pouring fuel on the flames.

The First Amendment Is on Its Knees

The First Amendment protects your right to speak, even if it’s vague or provocative. True threats, meaning serious expressions of intent to commit unlawful violence, lose that protection. A post about “clips,” with no weapons or intent, should be safe. But Dearborn police pounced, twisting ambiguity into a crime. Now imagine AI like ChatGPT scanning your posts. Say “drop some clips” or “so-and-so needs to go,” and you could wake up to sirens. No context, no intent, just punishment.

AI: The Speech-Snitching Machine

OpenAI’s ChatGPT uses hidden algorithms to flag “harmful” language—like “clips” or “fight”—for human review and possible police reports. It’s sold as safety, but it’s a trap. AI can’t grasp slang, hyperbole, or context. “Clips” for videos? To AI, it’s violence. OpenAI hides its flagging criteria and error rates, leaving you defenseless. One misread post, and you’re in a police database—or worse. This isn’t protection; it’s a digital spy ready to betray you.

A Rogue Government’s Ultimate Weapon

Picture a government hijacking AI, one like the Biden administration, which targeted pro-life activists, Christians, and gun owners. A regime hostile to free speech could tweak ChatGPT to flag “dangerous” views: posts about gun rights, traditional values, or protests. Your opinion becomes a crime. The UK already logs “non-crime hate incidents” for legal speech. Here, AI could make that dystopia real overnight, silencing pro-lifers, Christians, or anyone who speaks out.

The Clock Is Ticking

Anthony Young’s arrest is your warning shot. His “clips” post—maybe about videos, maybe not—got him cuffed despite no guns or plan. AI systems like ChatGPT, blindly flagging vague words, are a loaded gun aimed at your free speech. If a rogue government takes control, it’ll fire, targeting entire groups for their beliefs. Every post you make is a gamble.

Fight Back Before Your Voice Is Silenced

Your freedom to speak is on the chopping block. Demand that OpenAI reveal how ChatGPT flags speech and what its error rates are. Push for laws protecting vague or provocative speech from punishment. Share this warning with friends on X, Facebook, or whatever social media platform you prefer. Because if AI and a rogue government start policing your words, the First Amendment is dead, and you could be next for saying “clips.”
